HOW TO MEANINGFULLY INCORPORATE AI INTO FINANCIAL PLANNING WORKFLOWS

The goal of a financial planner is to create financial plans and understand when the company has deviated from the projections. They use a tool called what-if analysis to run potential scenarios by varying revenues and costs and seeing how those changes affect overall performance. From this analysis, they are able to determine the risk associated with these discrepancies.
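To make the idea of what-if analysis concrete, here is a minimal sketch in Python. The function name, the scenario labels, and all figures are hypothetical and chosen only for illustration; they do not come from Oracle's EPM platform or from any real plan.

```python
def run_scenario(revenue, costs, revenue_change=0.0, cost_change=0.0):
    """Return profit after applying fractional changes to revenue and costs."""
    return revenue * (1 + revenue_change) - costs * (1 + cost_change)


# Hypothetical baseline figures (arbitrary units).
BASE_REVENUE = 1000.0
BASE_COSTS = 800.0

# Each scenario is a pair: (fractional revenue change, fractional cost change).
scenarios = {
    "baseline": (0.0, 0.0),
    "revenue down 5%": (-0.05, 0.0),
    "costs up 3%": (0.0, 0.03),
}

results = {
    name: run_scenario(BASE_REVENUE, BASE_COSTS, rev_change, cost_change)
    for name, (rev_change, cost_change) in scenarios.items()
}

for name, profit in results.items():
    print(f"{name}: profit = {profit:.1f}")
```

Comparing each scenario's profit against the baseline is what lets a planner gauge how exposed the plan is to a given change.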

My role on this team was to understand the workflow of a financial planner and incorporate Gen AI in an intelligent, intentional manner. This required taking time to understand the various goals of our financial modeling users and being creative about which moments AI could be game changing. Additionally, we were not allowed to use chat bots.

Clarity of Purpose

To understand the cause of a plan discrepancy, a planner may need to manually pull from as many as four different data sources: 1) EPM aggregate balances; 2) ERP transactional data; 3) FAW dashboards; and 4) OAC transactional data.

All of this data may need to be pulled and joined in Excel to calculate which data points are the key drivers of the discrepancy.
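The calculation a planner performs by hand at this point can be sketched roughly as follows. This is an illustrative example under stated assumptions, not the actual workflow or data: the `SegmentLine` type, the segment names, and every number are invented, and the data is assumed to be already joined into one line per segment.

```python
from dataclasses import dataclass


@dataclass
class SegmentLine:
    segment: str
    plan: float      # planned revenue (hypothetical units)
    forecast: float  # predicted revenue (hypothetical units)


def rank_variance_drivers(lines):
    """Return (segment, variance, share_of_total_gap), largest shortfall first."""
    total_gap = sum(line.forecast - line.plan for line in lines)
    ranked = []
    for line in lines:
        variance = line.forecast - line.plan
        # Share of the overall gap explained by this segment.
        share = variance / total_gap if total_gap else 0.0
        ranked.append((line.segment, variance, share))
    ranked.sort(key=lambda row: row[1])  # most negative variance first
    return ranked


# Hypothetical, already-joined data.
lines = [
    SegmentLine("Commercial Vehicles", 500.0, 420.0),
    SegmentLine("Passenger Cars", 800.0, 790.0),
    SegmentLine("Parts and Service", 200.0, 205.0),
]

drivers = rank_variance_drivers(lines)
for segment, variance, share in drivers:
    print(f"{segment}: variance {variance:+.1f} ({share:.0%} of gap)")
```

The segment at the top of the ranking is the first place a planner would look when investigating the discrepancy.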

As a financial planner, I need to leverage Gen AI to quickly analyze data and understand the causes driving a discrepancy between a plan and a prediction so that I know what I need to explore in order to mitigate the discrepancy.

As a financial planner, I need to leverage Gen AI to create different scenarios and understand their impact so that I can recommend the best course of action to address a discrepancy to a plan.

Context-bound, built-in assistants

Incorporating Gen AI into the workflow of a financial planner in Oracle's EPM platform has the potential to reduce the time it takes a user to act on a discrepancy between a plan and a prediction from ~2 weeks to minutes. Given that the target user is extremely experienced, the key design challenge was ensuring that the AI is built in, not bolted on.

User Research

The user I am designing for is a financial analyst, specifically at an automobile manufacturing corporation. It is important to note that she is an expert user, which means that she knows how to pull information from a page and requires less overall guidance.

I was able to break down the steps a financial planner takes into key stages:

- Discover — The user becomes aware of discrepancies.

- Learn — The user understands causation behind a discrepancy.

- Explore — The user works with data to determine potential solutions.

- Take action & Monitor — The user works with stakeholders to act according to an insight.

From here, I mapped out the decision tree of a financial analyst to understand the key action points. This allowed me to simplify the user's interactions so I could create the optimal model for a financial analyst to learn and explore data.

Competitive Analysis

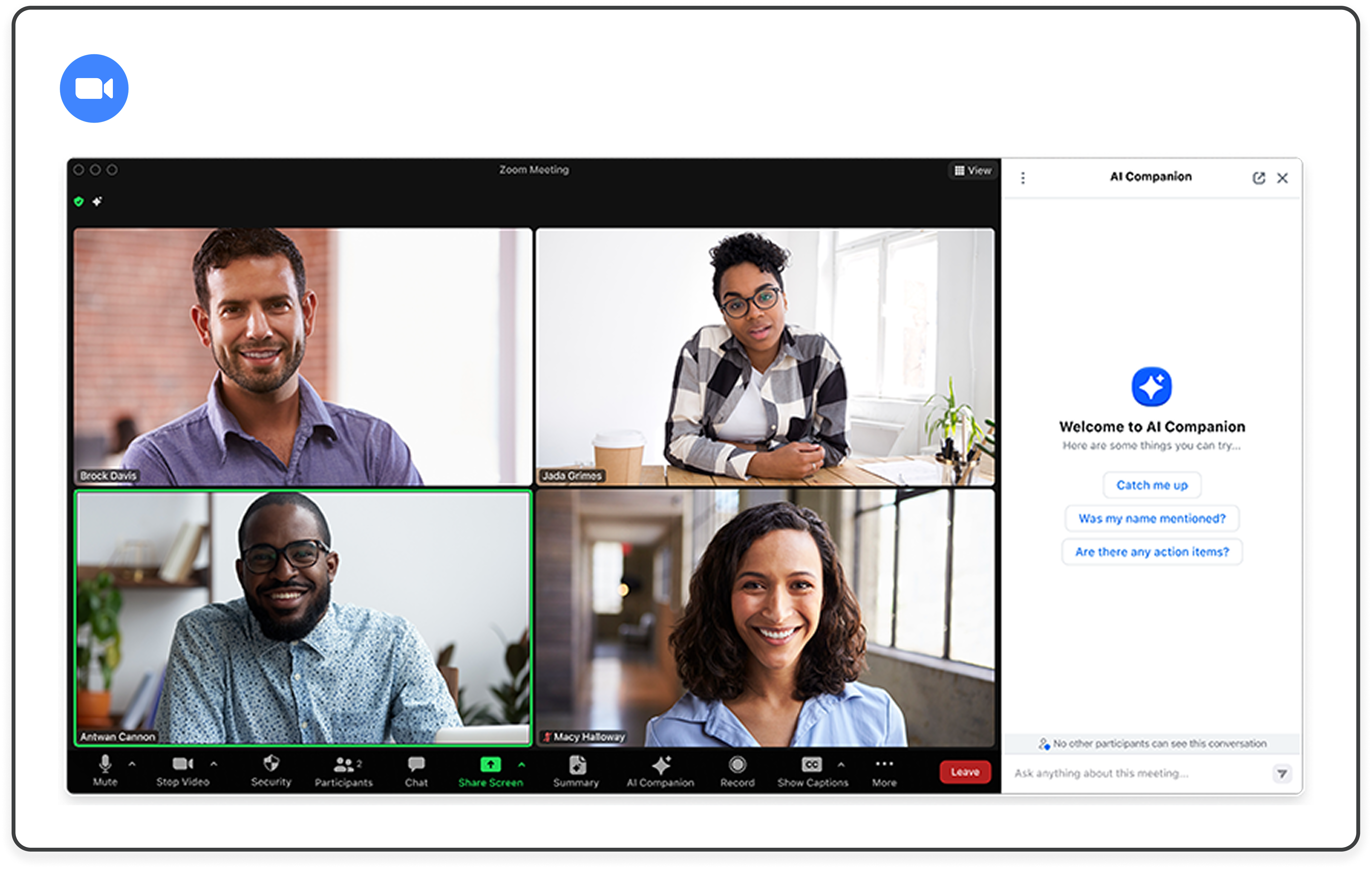

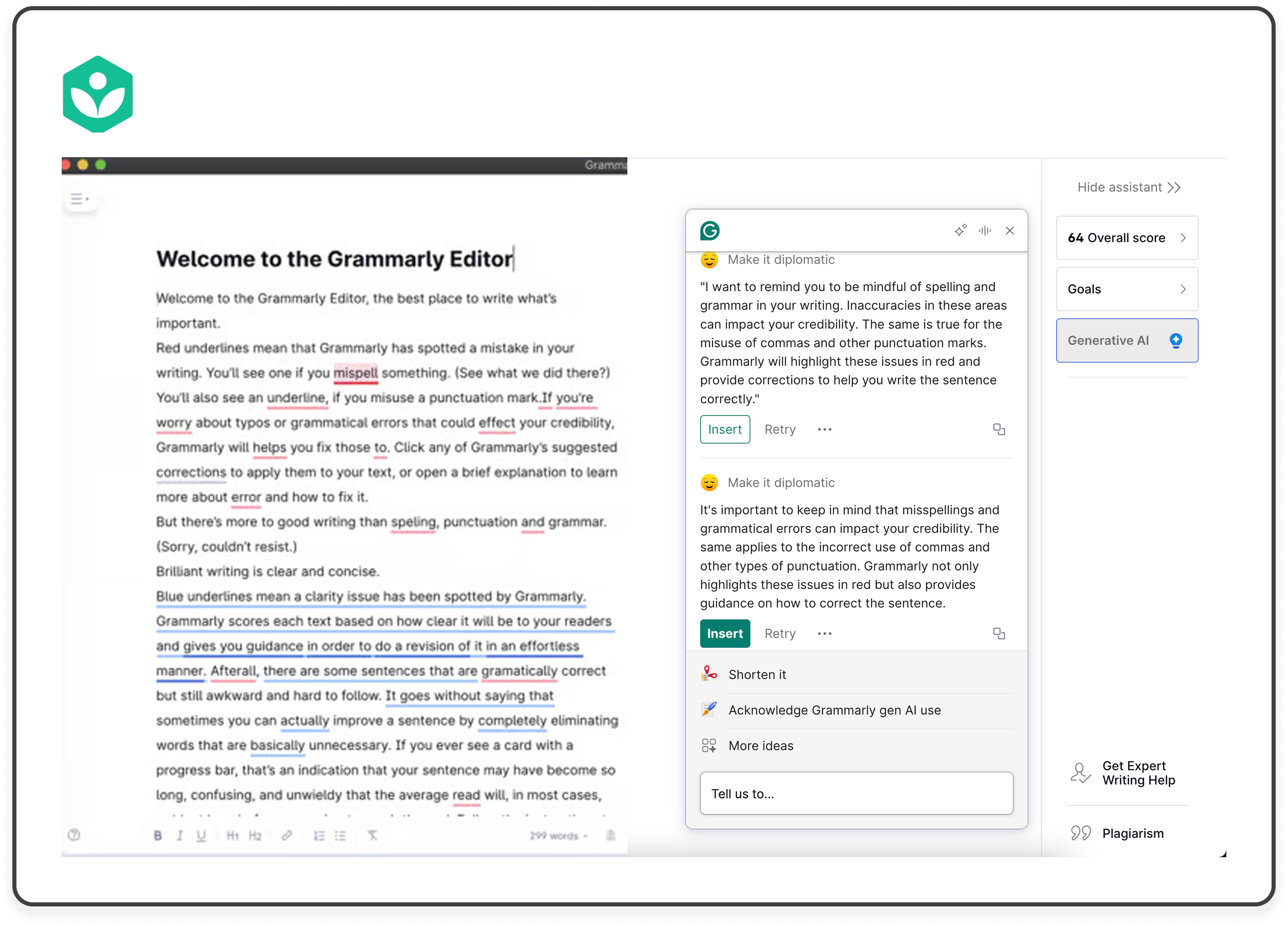

I looked into over 15 products and their AI assistants. I then summarized and categorized each AI experience so I could compare them to our use case and to each other.

- Omnipresent: the product's AI assistant is meant to be used throughout the application.

- Contextual: the AI is only revealed within a particular context of the application.

- General audience: all users of the application would benefit from the AI assistant.

- Specific audience: the AI is directed towards a subset of users.

Insights

Insight #1: Omnipresent AI assistants are generally in the form of chat bots because their uses are more general.

Insight #2: AI assistants with specific use cases and specific audiences are in the form of input/output boxes.

Insight #3: Prompt suggestions are displayed to help the user understand how the AI assistant is intended to be used.

Insight #4: AI editing tools can directly insert generated content whereas more general AI tools cannot.

Design Principles

After my research, I was able to identify design principles that guided my design decisions as I began ideation:

We want to be sure to earn the trust of our professional users by allowing them to be in control of what Gen AI produces for them. We only provide suggestions when we have extremely high confidence in its relevance.

Prompts and queries are limited to what is relevant to a page. This is important so that the AI doesn't have to distinguish the user's intent.

We provide backing data to the user for them to validate any analysis that Gen AI has provided so they can be sure that the response is factual. This gives them the option to verify insights themselves, and also increases trust since the AI is effectively citing its sources.

I wanted to make sure the AI experience in my designs was differentiated and supported our users' goals in a way that was truly beneficial. I was careful to embed AI into the application, as opposed to throwing a chat bot in a side panel and expecting the user to seek it out themselves.

Leveraging Gen AI to…

Learn — help users quickly analyze data and understand the causes driving a discrepancy between a financial plan and predicted actual.

Explore — rapidly create different scenarios and understand their impact.

Design Exploration 1: Chat Bot vs. Input/Output Box

My first design exploration integrated a chat bot into the user flow because this was an extremely relevant pattern in my research.

In this exploration, the user wants to understand why the variance is high in this chart, so she asks the AI assistant, which can be accessed globally. This opens a chat panel where the user can ask about the data on the page.

This concept was quickly discarded for a few reasons. First, my research showed that chat bots are most useful when their use cases are general, whereas for specific use cases such as financial planning, input/output boxes are much more successful patterns. Second, this chat bot doesn't feel connected to the page, which is a huge drawback for our use case, where the user is constantly asking questions about the information on the page.

Design Exploration 2: Read-Only Pages

A question I had while designing was how to handle read-only pages versus pages we were modifying. The dashboard page is intended to be read-only, so I explored asking questions about the content on the page in a page-level magic box, which then opened an insight panel for the AI-generated response. From here, the user could expand the data in a dynamic tab and ask further questions.

The idea here was that the user would be able to see the full page on the left while interacting with the response in the right panel. However, we ultimately felt that this interaction didn't fully justify the space the panel took.

Design Exploration 3: Object-Level Magic Boxes

Within our explorations of read-only pages versus pages intended to be modified, a key distinction I explored was page-level magic boxes versus object-level magic boxes.

Here, the idea was that the user wanted to run a what-if analysis on this data set, which is an action that modifies the page, so they would be able to click into the data visualization and add scenarios right there in situ.

However, we realized that specifically for pages dedicated to running what-if predictions, the visualizations on the page would all be related to the same data. This made it counterintuitive for the user to click into specific data sets and ask object-level questions since the questions inherently were about all the data on the page.

Design Exploration 4: Page-Level Magic Boxes

The object-level approach differs from a page-level magic box, which is demonstrated in this exploration.

Here, the user would interact with the data by dragging an empty canvas into the page, prompting the search box at the top of the page, which would then fill the empty canvas in response.

As I continued exploring patterns, the same observation held: on pages dedicated to running what-if predictions, every visualization relates to the same data, so the user's questions are inherently about the whole page rather than a single object. From these explorations, we concluded that pages dedicated to expanding a single data set would benefit more from page-level magic boxes.

User Journey: Learn

In this flow, Simone, a financial analyst, returns from vacation and opens the daily flash forecast to find Q3 profit falling short of plan due to a revenue gap. Using the embedded Ask Oracle component directly within the data visualization, she asks clarifying questions about the shortfall and receives AI-generated insights with transparent data sources. As she drills down into the contributing factors, Commercial Vehicles in this case, the AI dynamically expands the analysis, helping her uncover that an over-forecasting bias caused the variance and enabling her to act on the findings within minutes instead of days.

User Journey: Explore

In this stage, Simone moves from understanding why the gap exists to exploring how to close it. She asks the AI for recommendations to meet her revenue target, fully guiding the interaction herself. The AI responds with potential strategies and opens a dynamic tab where she can test what-if scenarios directly in the interface.

Since she's adjusting a single data source, this uses a page-level magic box that lets her add or edit scenarios inline without breaking focus. Curious about marketing's impact, Simone runs a goal-seeking analysis to see how much additional ad spend it would take to reach plan. The AI calculates roughly $350M, which she saves and shares with her team to discuss next steps.
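A goal-seeking analysis works backward from a target output to the input that produces it. The sketch below shows one common way to do this, bisection search, and is an assumption about the general technique rather than a description of how Oracle's AI performs the calculation. The `projected_revenue` model, its coefficients, and the target are hypothetical and are not meant to reproduce the figure in Simone's scenario.

```python
import math


def goal_seek(f, target, lo, hi, tol=1e-6, max_iter=200):
    """Find x in [lo, hi] with f(x) close to target, assuming f is increasing."""
    if not (f(lo) <= target <= f(hi)):
        raise ValueError("target is not bracketed by [lo, hi]")
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid  # need more input to reach the target
        else:
            hi = mid  # target reached or overshot; tighten from above
        if hi - lo < tol:
            break
    return (lo + hi) / 2


def projected_revenue(ad_spend):
    # Hypothetical model: fixed baseline plus diminishing returns on ad spend.
    return 900.0 + 10.0 * math.sqrt(ad_spend)


required_spend = goal_seek(projected_revenue, target=1000.0, lo=0.0, hi=1000.0)
print(f"Ad spend needed to reach plan: {required_spend:.2f}")
```

Bisection is used here because it only requires that revenue rises with spend; it needs no derivative of the underlying model.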

Full Walkthrough

Key Takeaways

This internship required me to learn a lot about financial planning and its tools. Designing for an entirely new user group was challenging but extremely rewarding. I had to pivot design ideas many times, but I got closer to my final designs with every iteration. Another key takeaway was working within Oracle's Redwood Design System. It was challenging to fit my ideas within Redwood page templates. This summer has allowed me to improve as a storyteller and overall designer.

My greatest takeaway from this experience was designing for financial planners, a user group completely unknown to me. This forced me to deeply understand a new user journey so I could design an AI experience that was built in, not bolted on.