Fine-tuning the music listening experience with AI-powered audio profiles

This project was created in a class dedicated to designing for accessibility. We were given no constraints on the users we designed for, the problem we set out to solve, or the capabilities of the technology behind our platform. The only non-negotiable criterion was that the platform use AI.

Create a platform that uses AI to improve the live music listening experience for members of the Deaf and Hard-of-Hearing (DHH) community

Although hearing loss varies from person to person, listeners share a common need: tailoring music to the frequencies they hear best, efficiently and in a socially acceptable way. We wanted to use AI to quickly and responsively create customized equalizer profiles based on each listener's hearing preferences and context.

Understanding the DHH community's relationship with music

We conducted in-depth interviews with three participants to understand how members of the Deaf and Hard-of-Hearing community experience music. Each participant brought unique perspectives that shaped our design direction.

We met:

Person #1: T

Biomedical Informatics (BMI) graduate student @ Stanford

Received hearing aids at age 3

Person #2: O

Artist and Musician

Received a cochlear implant 5 years ago

Person #3: D

Ear, Nose, and Throat Doctor

Works extensively with DHH patients

Key Questions That Guided Us:

Hearing

• How would you describe your level of hearing?

• Tell me about a memorable time that you faced a challenge due to your hearing

Music

• How would you describe your experience/relationship with music?

• What is important to you about listening to music?

Needs

• Tell me about a time where you found it challenging to listen to music

• What resources are important for you in regards to music?

Music Sharing

• Do you ever share music experiences with your parents or other DHH people?

• What's important to you about sharing music experiences together?

What we learned

How might we use AI to quickly and responsively create customized audio equalizer profiles based on a listener's hearing preferences and context?

Exploring 5 Concepts

We explored multiple approaches to improve the music listening experience for the DHH community. Each concept leveraged AI in different ways to address the challenges we discovered through needfinding.

Concept 1: Audio Descriptions

Automatically generated audio descriptions that accompany song lyrics. Many streaming platforms currently display lyrics, but these capture only what is being sung. AI-generated audio descriptions could convey the tone of a song, volume or tempo changes, instrumentation, and other musical elements that lyrics alone cannot capture.

Concept 2: ASL Interpretation

ASL interpretation as a supplement to the current music streaming experience. While lyrics may be helpful for understanding what is being sung, ASL interpretation offers another layer of meaning and can convey emotion and tone that lyrics alone cannot. Users fluent in ASL could upload song interpretations that other users can view while listening.

Concept 3: AI-Generated Audio Profiles (Selected)

Users upload their audiogram, and AI determines an initial audio profile. From there, users can customize settings such as frequency levels, noise suppression, and voice amplification. Based on this initial screening, the app automatically creates a series of more nuanced preset profiles for music.
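The case study does not specify how the AI maps an audiogram to an initial profile. As a rough illustration only, the sketch below substitutes the half-gain rule, a classic hearing-aid fitting rule of thumb that boosts each frequency by about half the measured hearing loss. The `AudioProfile` fields, the `initial_profile` function, and the 30 dB cap are hypothetical.

```python
from dataclasses import dataclass, field

# Standard audiometric test frequencies in Hz.
AUDIOGRAM_FREQS = (250, 500, 1000, 2000, 4000, 8000)


@dataclass
class AudioProfile:
    """Hypothetical profile model: EQ gains plus two of the other settings named above."""
    name: str
    eq_gains_db: dict = field(default_factory=dict)  # band center (Hz) -> gain (dB)
    noise_suppression: float = 0.0    # 0.0 (off) to 1.0 (maximum)
    voice_amplification: float = 0.0  # 0.0 (off) to 1.0 (maximum)


def initial_profile(thresholds_db_hl, max_gain_db=30.0):
    """Build a baseline profile with the half-gain rule (a stand-in, not TuneIn's AI).

    thresholds_db_hl maps each audiogram frequency (Hz) to the listener's
    hearing threshold in dB HL; missing frequencies are treated as 0 dB HL.
    """
    gains = {}
    for freq in AUDIOGRAM_FREQS:
        loss = max(0.0, thresholds_db_hl.get(freq, 0.0))
        # Boost by half the loss, but never past the hypothetical cap.
        gains[freq] = min(loss / 2.0, max_gain_db)
    return AudioProfile(name="Baseline", eq_gains_db=gains)


profile = initial_profile({250: 20, 500: 25, 1000: 40, 2000: 55, 4000: 70, 8000: 75})
print(profile.eq_gains_db)
# {250: 10.0, 500: 12.5, 1000: 20.0, 2000: 27.5, 4000: 30.0, 8000: 30.0}
```

The more nuanced music presets described above could then start from this baseline rather than from a flat equalizer.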

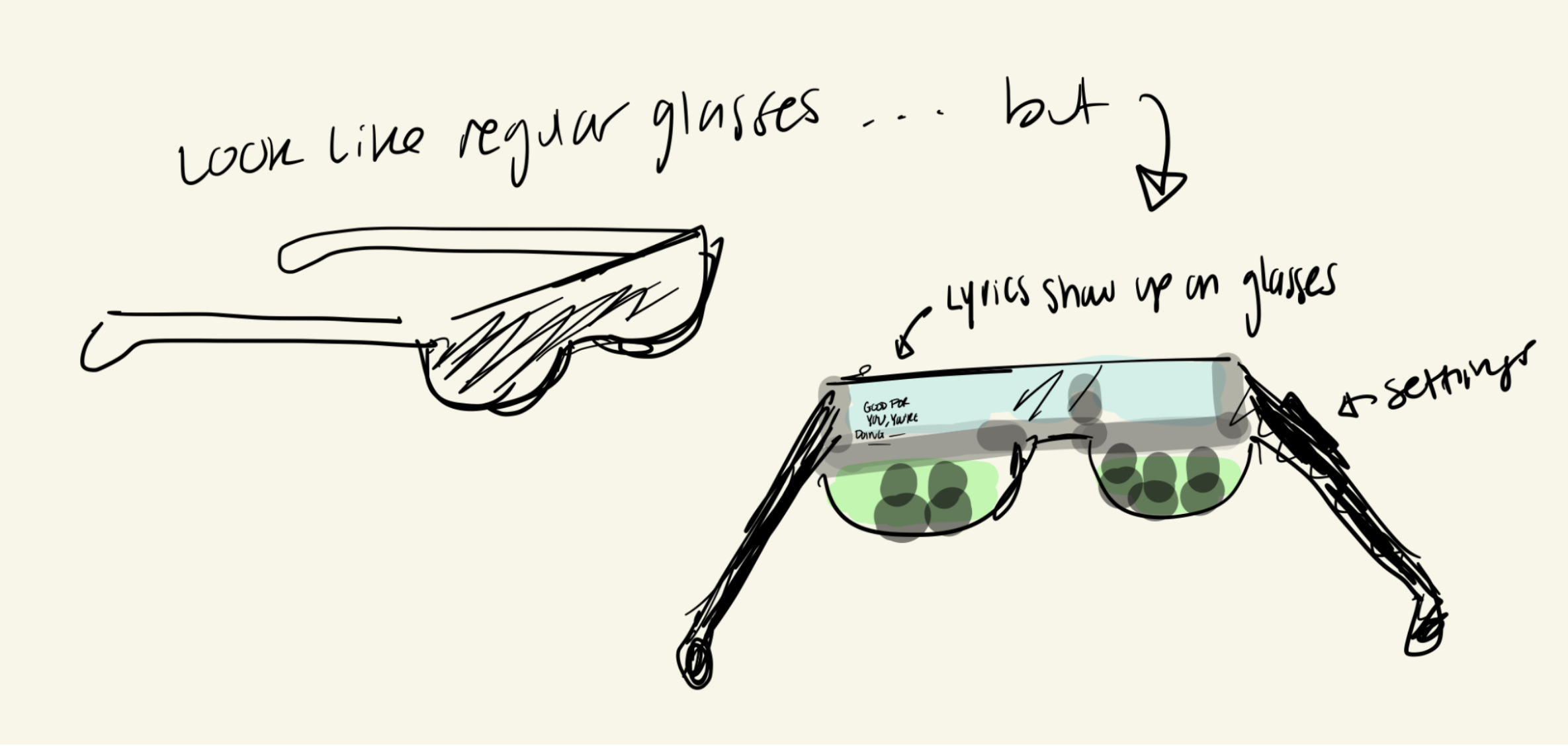

Concept 4: Lyrics Display Glasses

Smart glasses that translate audio into lyrics in real time. Based on our needfinding insight that some people who are deaf deeply enjoy the lyrical component of music, these glasses would let users follow along with lyrics at a concert without looking at their phone, a behavior with low social acceptability.

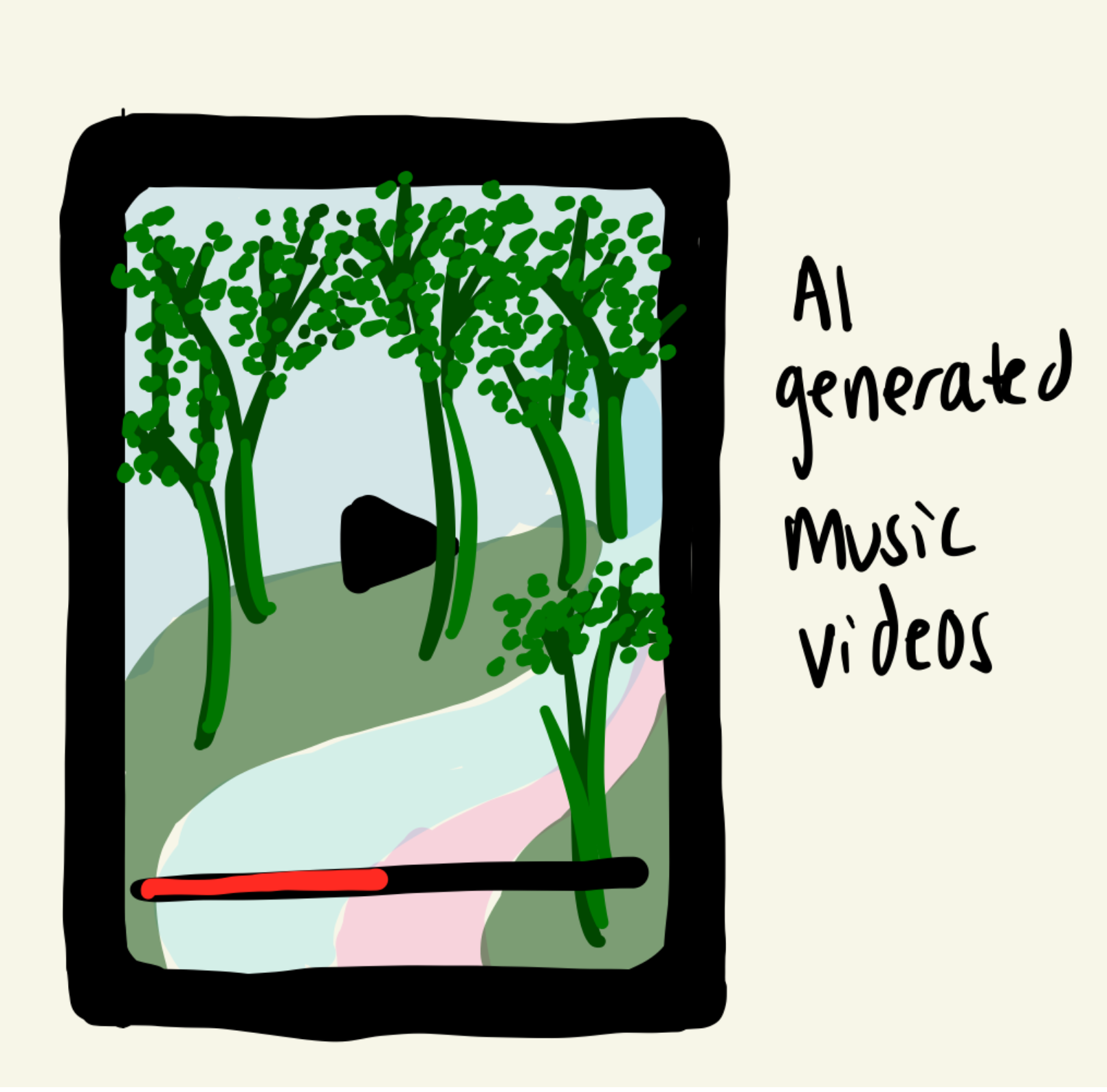

Concept 5: AI-Generated Music Videos

We also learned that some people enjoy music videos, which evoke a range of feelings alongside the lyrics. AI-generated music videos based on lyrics could enhance the experience, especially since many songs have no accompanying music video.

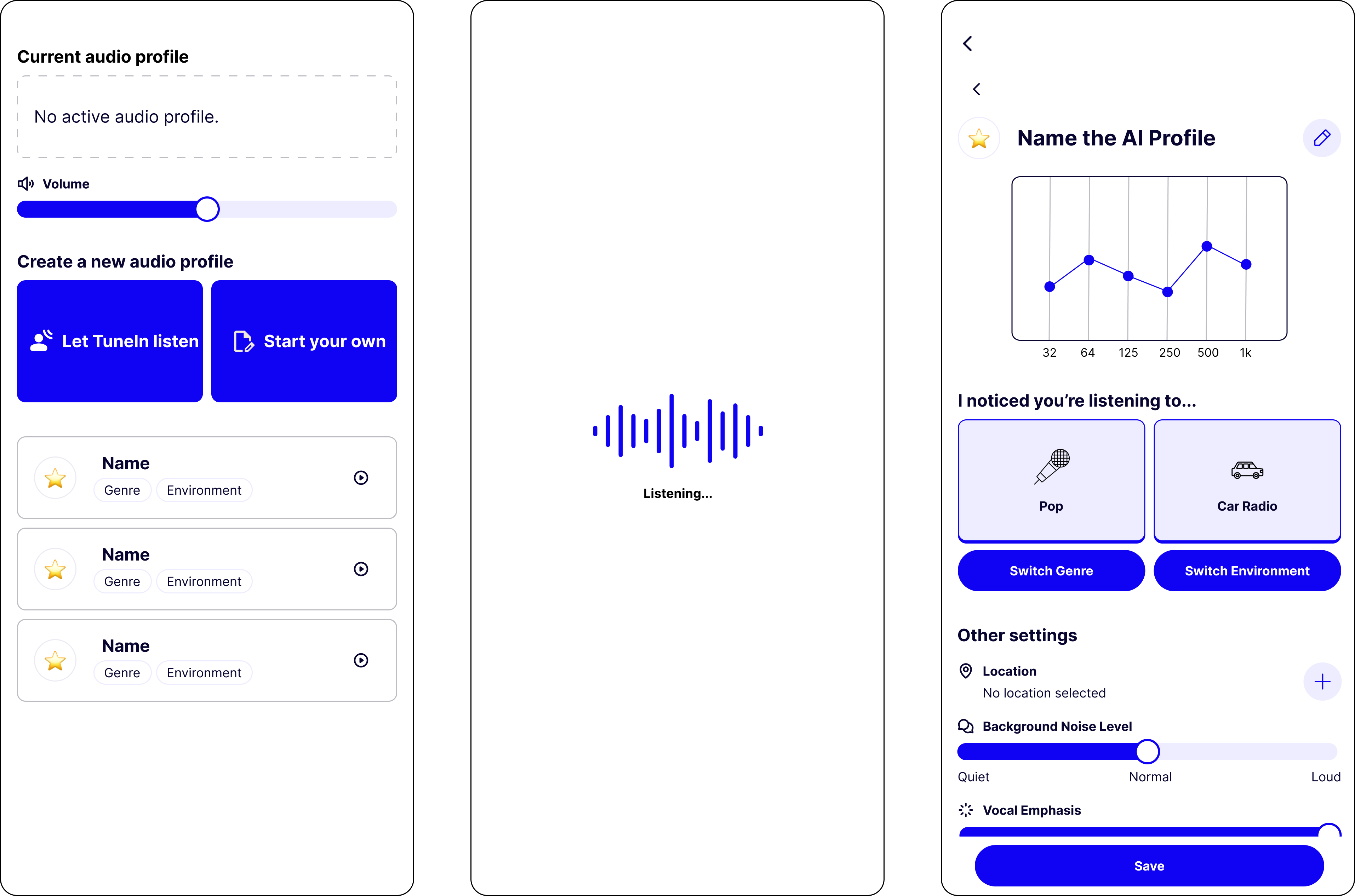

Building the Core Experience

Our solution focuses on three main tasks that work together to create a seamless, AI-powered audio customization experience:

1. Upload Audiogram

Users upload their audiogram and complete an initial hearing assessment to establish baseline hearing preferences.

2. AI Auto-Generation

AI automatically generates custom equalizer settings based on the context of the music being played—the core feature of our prototype.

3. Manual Customization

Users can create and adjust custom settings from scratch for complete control over their audio experience.

Our core user task relies on an AI assistant that detects information such as the genre and instrumentation of the music, volume levels, and environmental context (e.g., whether the user is streaming through headphones or attending an outdoor concert). It then optimizes the listening experience by quickly and automatically adjusting the settings of the user's assistive hearing devices.
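Detecting genre, volume, and environment would require trained classifiers that are beyond a short example. Assuming those labels are already available, one simple way to turn them into device settings is to layer per-context EQ offsets on top of the listener's baseline gains, as sketched below. The offset tables, their values, and `contextual_gains` are hypothetical placeholders, not tuned settings from the prototype.

```python
# Hypothetical EQ offsets (dB) per detected context; values are placeholders.
GENRE_OFFSETS = {
    "funk": {250: 3.0, 500: 1.5},   # low-frequency boost
    "pop": {2000: 2.0, 4000: 1.5},  # upper-mid boost
}
ENVIRONMENT_OFFSETS = {
    "headphones": {},
    "outdoor concert": {250: -2.0, 8000: 2.0},
}


def contextual_gains(baseline, genre, environment, limit_db=30.0):
    """Layer genre and environment offsets onto baseline EQ gains.

    Unknown genres or environments contribute no offset, and every band
    is clamped to [-limit_db, limit_db].
    """
    gains = dict(baseline)  # copy so the saved baseline is not modified
    for offsets in (GENRE_OFFSETS.get(genre, {}), ENVIRONMENT_OFFSETS.get(environment, {})):
        for freq, delta in offsets.items():
            gains[freq] = max(-limit_db, min(limit_db, gains.get(freq, 0.0) + delta))
    return gains


baseline = {250: 10.0, 500: 12.5, 1000: 20.0, 2000: 27.5, 4000: 30.0, 8000: 30.0}
print(contextual_gains(baseline, "funk", "headphones")[250])  # 13.0
print(contextual_gains(baseline, "pop", "headphones")[4000])  # 30.0 (clamped)
```

Under this sketch, a playlist switching from funk to pop (as in the "Adjust" test task later on) would simply mean recomputing the gains with the new genre label.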

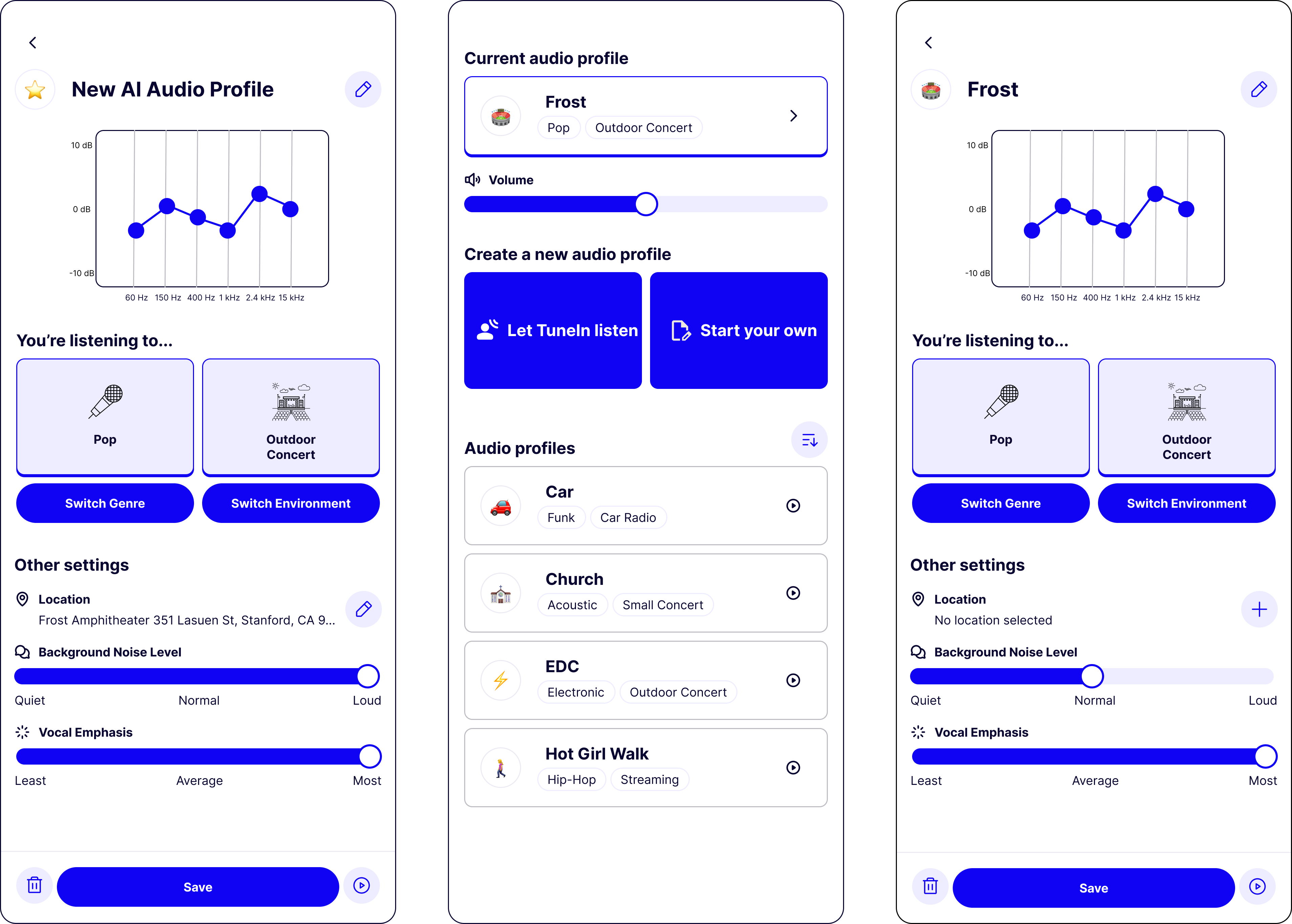

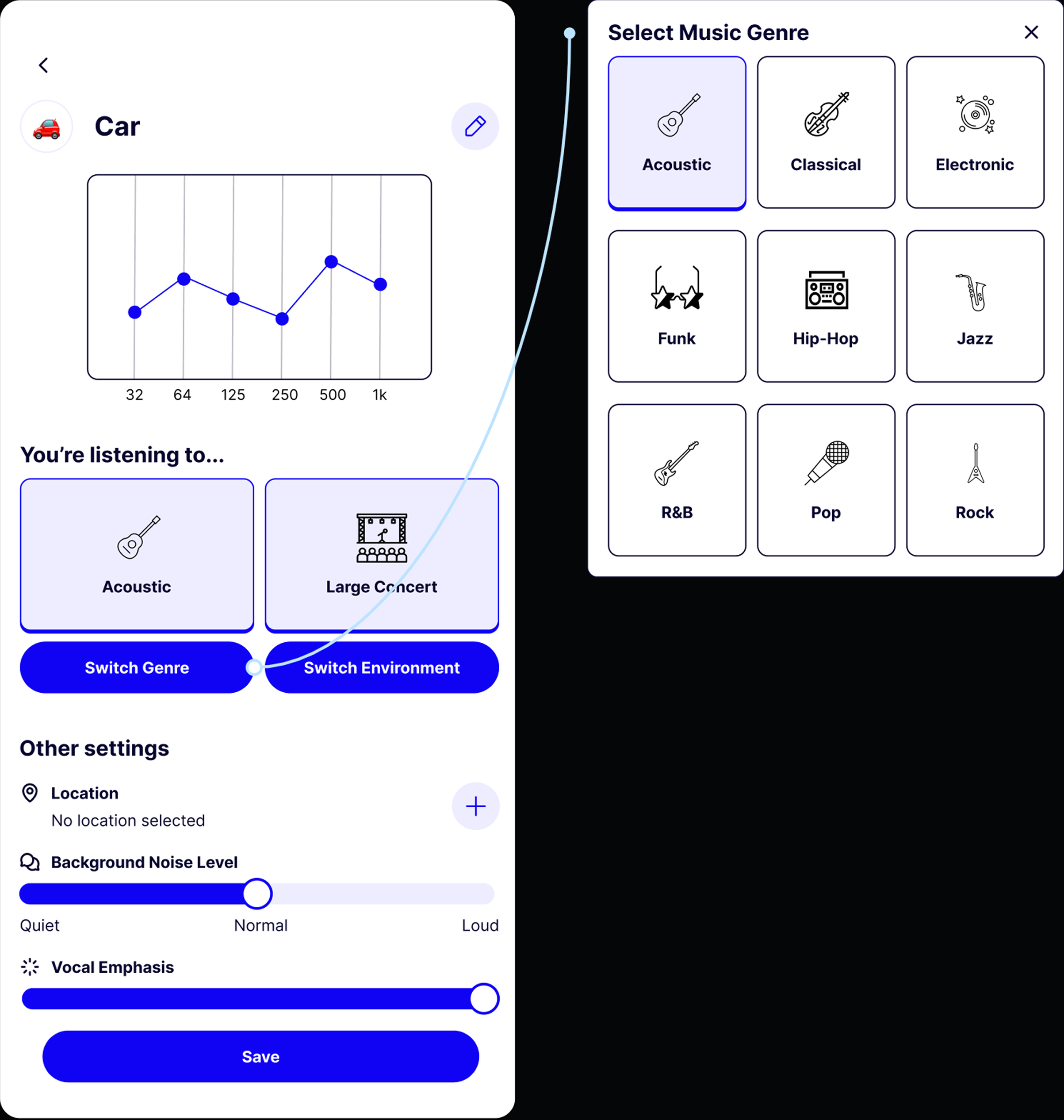

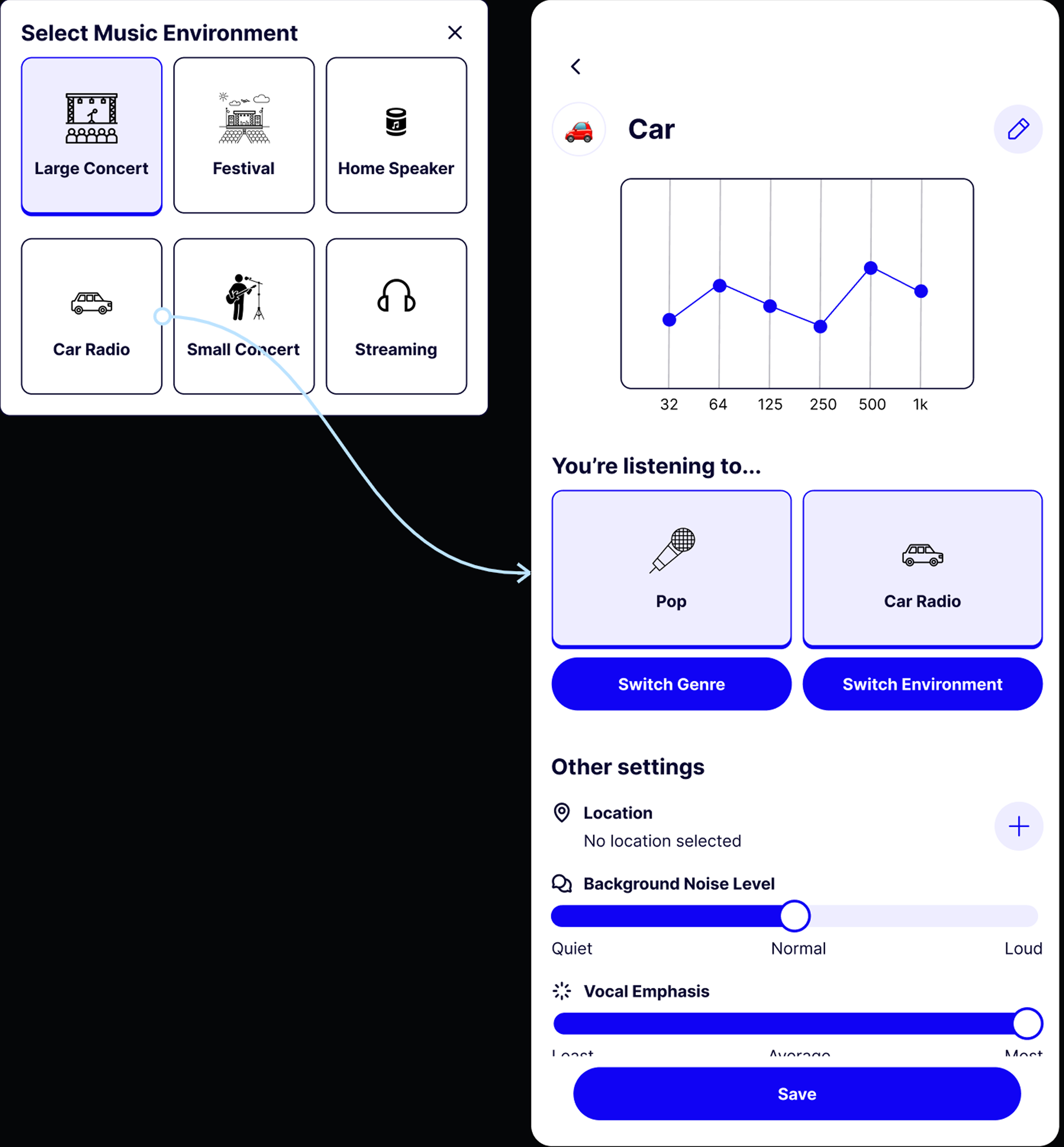

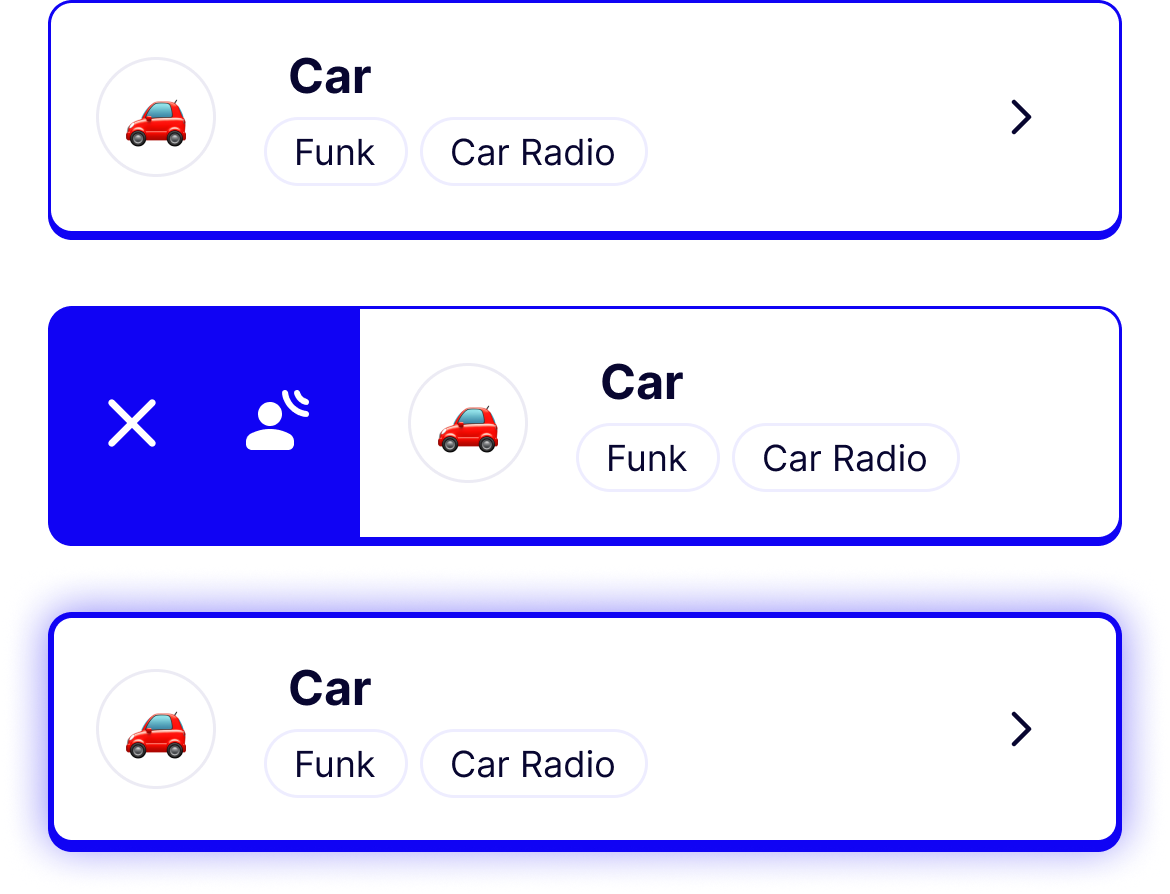

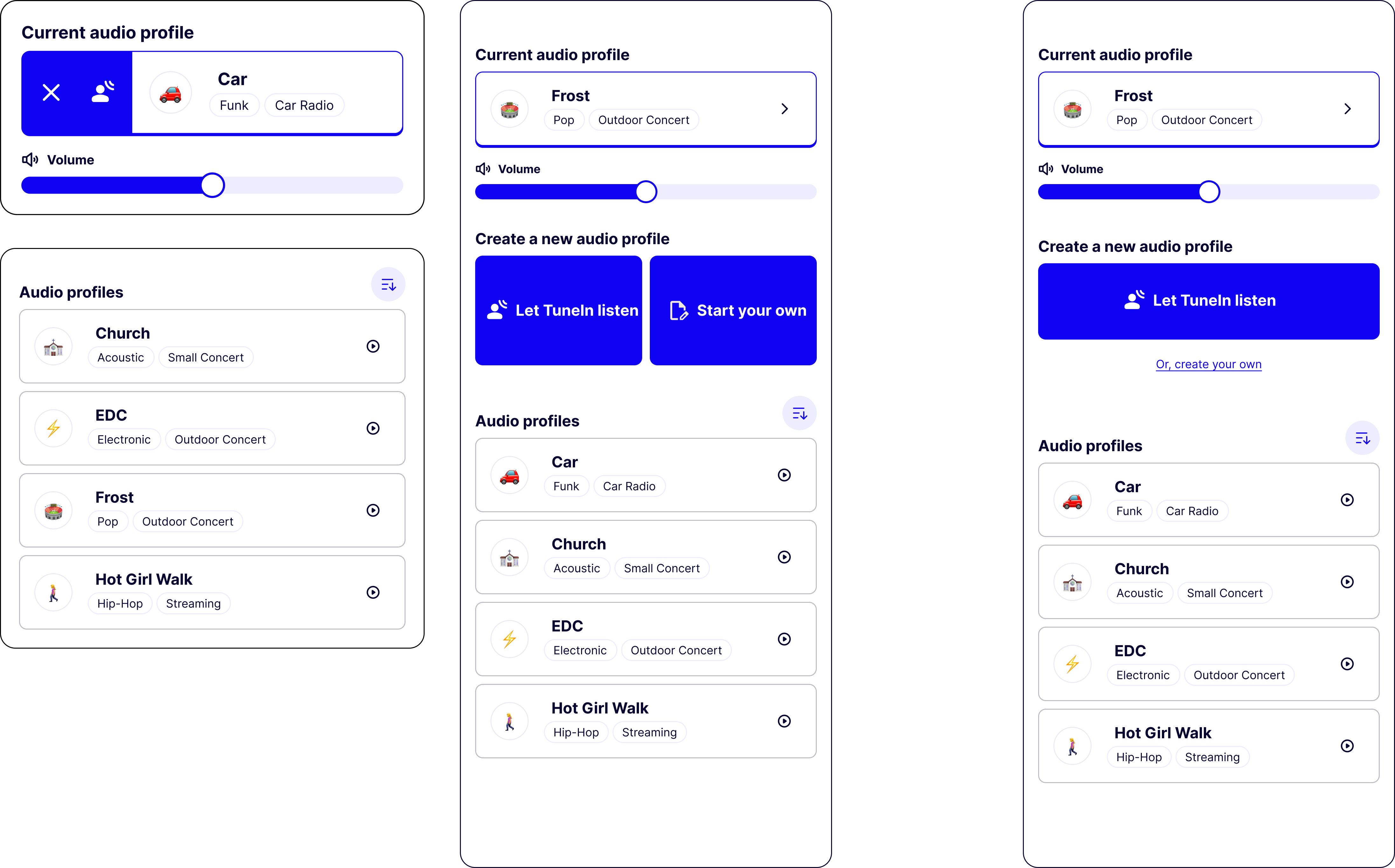

Design Evolution

We included the genre and environment of each audio profile on the home screen so users can quickly select the appropriate settings for their surroundings. Originally, audio profiles were displayed only by their icon and title. However, because we envisioned profiles being created for very specific genre and environment combinations, we updated the home screen to surface these two contextual settings.

Users can select from a wide variety of genres and environments. We also included comprehensive customization options, such as frequency adjustments, noise suppression, and voice amplification, to ensure accurate personalization for each listening context.

Refining Based on Feedback

After initial testing, we made significant improvements to enhance both functionality and user experience. Here are the key revisions:

🔊 Always Listening Mode

We added an "always listening mode," in which TuneIn continuously gathers data about the environment through the phone's microphone and adjusts the current audio profile accordingly. This keeps the app responsive to changes in the user's surroundings. Users can swipe to quickly call on TuneIn AI for help, making the experience seamless and automatic.
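The case study does not describe how always listening mode decides when to change a profile. One plausible approach, sketched below as an assumption, is to track microphone loudness with an exponential moving average and flag a change only when the smoothed level drifts a set number of decibels from its value at the last adjustment, so brief noises do not cause constant switching. The `EnvironmentMonitor` class, its smoothing factor, and the 6 dB threshold are hypothetical; a real app would read frames from the phone's audio APIs.

```python
import math


def rms_dbfs(samples):
    """Loudness of one frame of float samples in [-1.0, 1.0], in dBFS."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


class EnvironmentMonitor:
    """Hypothetical change detector for an always-listening mode."""

    def __init__(self, alpha=0.2, threshold_db=6.0):
        self.alpha = alpha                # EMA smoothing factor in (0, 1]
        self.threshold_db = threshold_db  # drift needed before suggesting a change
        self.smoothed = None              # running loudness estimate (dBFS)
        self.reference = None             # smoothed level at the last adjustment

    def update(self, samples):
        """Feed one microphone frame; return True when a profile change is warranted."""
        level = rms_dbfs(samples)
        if math.isinf(level):
            return False  # ignore empty or fully silent frames
        if self.smoothed is None:
            self.smoothed = self.reference = level
            return False
        self.smoothed = self.alpha * level + (1 - self.alpha) * self.smoothed
        if abs(self.smoothed - self.reference) >= self.threshold_db:
            self.reference = self.smoothed  # re-anchor to avoid repeated triggers
            return True
        return False


monitor = EnvironmentMonitor()
quiet, loud = [0.01] * 480, [0.5] * 480
print([monitor.update(frame) for frame in (quiet, quiet, loud, loud)])
# [False, False, True, False]
```

The re-anchoring step acts as hysteresis: after one suggestion, the environment must drift another 6 dB before the next, which fits the "set it and forget it" goal.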

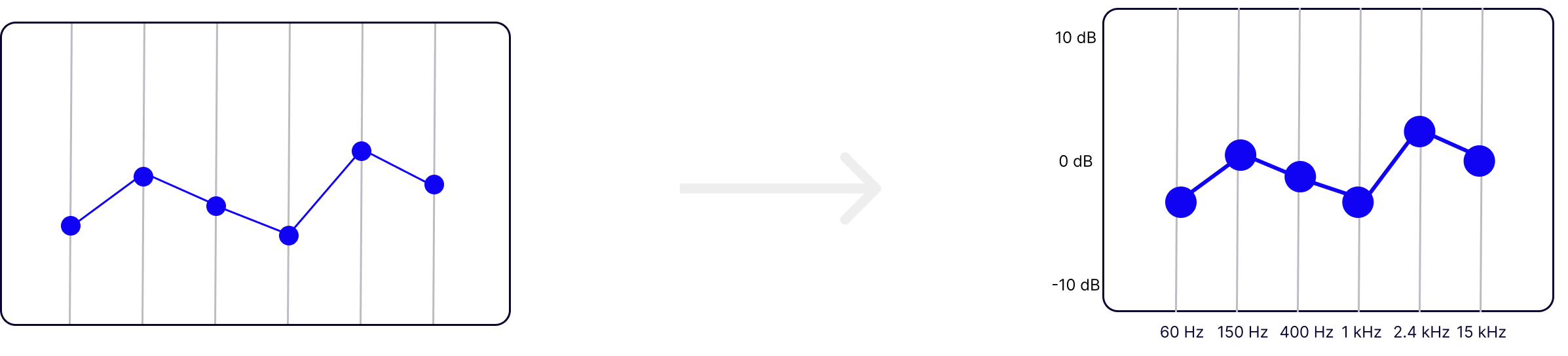

🎛️ Enhanced Audio Adjustments

We extended the equalizer's upper limit from 1 kHz to 15 kHz to match the range of traditional equalizers. While many instruments sit largely within the 200-600 Hz range, cymbals, guitar effects, and some vocals often extend well above 1 kHz. We also added "Environment Base Volume" and other fine-grained adjustment options to give users more control over their listening experience.

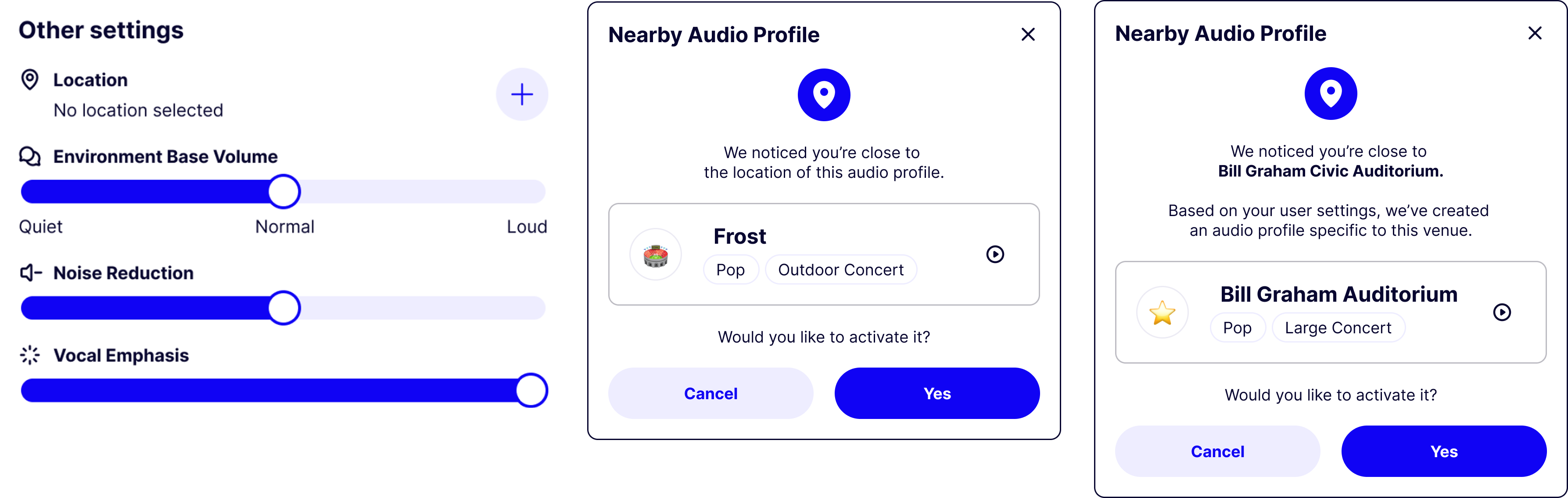

📍 Automated Location Settings

We added the option to set a location for an audio profile in addition to the environment option. Because acoustics differ between concert venues, one's home, the outdoors, and other spaces, location is another important contextual consideration. The app can now prompt users with suggested audio profiles based on their location, reducing the friction of finding the right profile.
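Prompting users with profiles for nearby saved locations could be as simple as a distance check. The sketch below assumes each profile stores a latitude and longitude, and uses the haversine formula to suggest, nearest first, any profile within a hypothetical 200 m radius of the user's current position. `SavedProfile`, `suggest_profiles`, and the coordinates in the example are made up for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class SavedProfile:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in meters."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def suggest_profiles(profiles, lat, lon, radius_m=200.0):
    """Return profiles saved within radius_m of (lat, lon), nearest first."""
    scored = [(haversine_m(lat, lon, p.lat, p.lon), p) for p in profiles]
    scored.sort(key=lambda pair: pair[0])  # sort on distance only
    return [p for dist, p in scored if dist <= radius_m]


# Made-up coordinates for illustration.
saved = [
    SavedProfile("Home (pop, headphones)", 37.5000, -122.0000),
    SavedProfile("Venue (rock, indoor concert)", 37.0000, -122.0000),
]
print([p.name for p in suggest_profiles(saved, 37.0005, -122.0000)])
# ['Venue (rock, indoor concert)']
```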

📲 UI Improvements

We added more labels with informative icons and adjusted the UI to emphasize the "Create a new audio profile" CTAs. We added action buttons for sorting audio profiles and quickly removing active profiles. Most importantly, we redesigned the "Let TuneIn listen" button to be more prominent, guiding users toward the AI feature rather than manual entry.

Testing with Real Users

We conducted user testing with two members of the DHH community via Zoom, giving participants virtual access to our Figma prototype. We focused on three key tasks:

Create

"You're at a concert at Frost. How might you create a new audio profile for this scenario?"

Select

"You've just arrived at Bill Graham Civic Auditorium. How might you select an audio profile for this scenario?"

Adjust

"You're in your car listening to a funk song. How might you adjust the audio profile when the playlist switches to pop?"

What Users Loved

Critical Feedback

Key Insights

TuneIn AI is an improvement over current solutions

Both participants chose the AI-generated audio profiles over creating them from scratch. Current solutions are "good enough," but this prototype is "great." Overall, participants were excited about this solution!

Balance between simplicity & customization

With current solutions, finding optimal settings takes a lot of time and effort. Our solution fills this gap and empowers users to edit settings efficiently. We must be careful not to upset this balance by adding too much complexity.

Consider accessibility for older users

The typical person with hearing loss is older and may be less tech-savvy. Our design must account for this demographic while still providing powerful features for those who want them.

Revisions — Simplify & Customize

Based on user feedback, we made targeted improvements to streamline the experience while maintaining powerful customization options. These changes focused on reducing friction and guiding users toward AI-powered features.

Three Core Flows

The final design focuses on three key flows that make audio profile management effortless.

Create

Users can quickly create new audio profiles by selecting a genre and environment. The AI immediately generates optimized equalizer settings based on the user's audiogram and the selected context. Users can then fine-tune these settings or save them as-is.

Activate

With location-based suggestions, users receive prompts to activate relevant audio profiles when they arrive at saved locations. This reduces friction and ensures users always have the optimal settings for their environment. Profiles can be activated with a single tap.

Suggest

The always-listening mode continuously monitors the environment and suggests profile adjustments when acoustic conditions change. Users can accept suggestions with a swipe, or let TuneIn automatically adjust in the background. This "set it and forget it" approach was highly praised in testing.

Reflections & Learnings

This process strengthened my ability to conduct user testing and extract the information needed to improve our product with each prototype. It is the most robust prototyping process I have participated in, and I am extremely proud of the final product.

User Testing Excellence

Conducting multiple rounds of testing with real DHH community members taught me how to listen for what users need versus what they say they want. Each iteration brought us closer to the right balance.

Design Systems & Branding

Building a comprehensive design system from scratch helped me understand how to create cohesive, scalable interfaces that maintain consistency while allowing for flexibility.

Accessible Design First

My greatest takeaway is the value of accessible design and learning how to incorporate accessibility into every aspect of UI/UX design—not as an afterthought, but as a core principle from day one.